If you are thinking the title of this blog post sounds like an Erle Stanley Gardner book, that is where the inspiration comes from. Gardner wrote the Perry Mason novels that were the basis for the Raymond Burr television series. Sometimes as DBAs we wish we had a Perry Mason-type figure to defend us, but we rarely get one.

In this story we’ll pretend that we have a defense attorney, because the prosecution is making a case to put us in jail for life. Maybe it isn’t quite that extreme, but for some people it feels that way. The prosecutor claims that the defendant (the DBA) can’t keep the systems running and performing. Additionally, time and time again, when DBAs make changes, the changes are not done correctly and cause further impact. For all these charges the prosecution seeks the strictest punishment in the land: life in jail (AKA automate the DBA's job and fire the person).

I know many people out there have been in this position before; I have too. At first I resisted the idea of automation. “How can they automate this?”, “They are going to automate me out of a job!”, and “No computer can do what I do!” were some of the thoughts and statements I had. The turning point for me came when I started seeing all the things I couldn’t get done: patching SQL Server, planning and executing migrations to newer versions of SQL Server, and even sleeping through the night, because I kept getting paged for 5-second fixes. As I said at 3 a.m., “A monkey could do this, why am I getting paged?”

So, a show of hands: how many people have been in that place before? It is time to be realistic about what automation can do to help us and to dispel the fear that it will replace us.

So why should we automate?

- Consistency

- Remove human error

- Reduce alerts

- Reduce repetitive tasks

- Improve reliability

- Sleep easier

What should be automated?

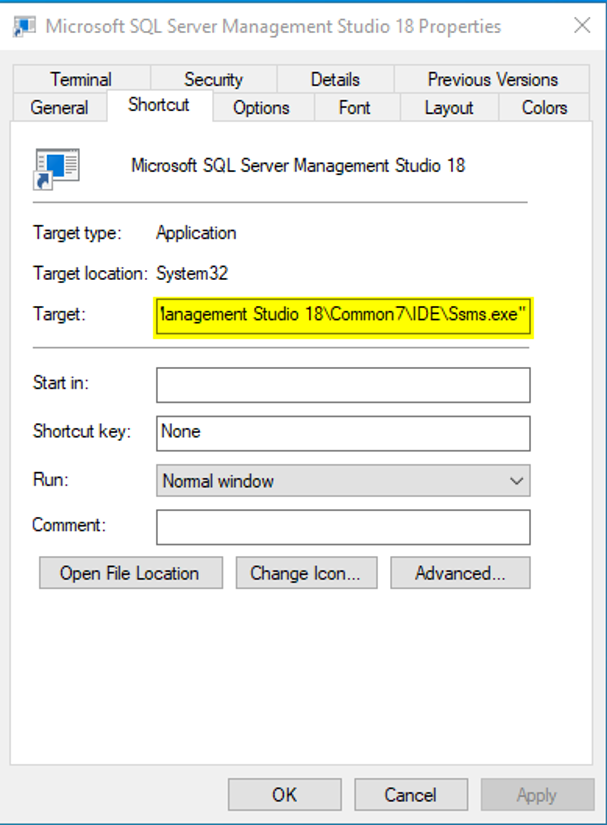

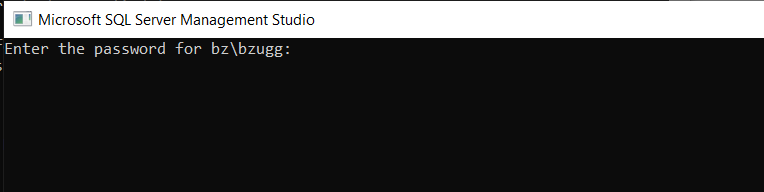

- SQL Server installs

- Patching

- Anything that triggers an alert and is fixed with a simple script

- Deployments (the great white whale of the DBA)

I plan to dive into each of these topics in more depth over time. For now, what I want to illustrate is that while the prosecution goes overboard in asking to get rid of the DBA in favor of automation, DBAs go overboard in refusing to consider it at all. We need to admit that some tasks are better suited to automation and strike a plea bargain with the prosecution that meets in the middle. In the end, the goal should be to let the DBA focus on bigger issues and to reduce how often they get called at night.